I recently had a Cisco UCS blade running vSphere 5.0 Update 2 fail to install a patch via Update Manager. The other hosts in the cluster installed the patch and rebooted without incident, but this one did not.

A review of the host’s /var/log/esxupdate.log showed errors during the patch installation:

2013-07-16T13:14:36Z esxupdate: BootBankInstaller.pyc: INFO: Copying /bootbank/scsi-qla.v00 to /altbootbank/scsi-qla.v00

2013-07-16T13:14:36Z esxupdate: esxupdate: ERROR: An esxupdate error exception was caught:

2013-07-16T13:14:36Z esxupdate: esxupdate: ERROR: Traceback (most recent call last):

2013-07-16T13:14:36Z esxupdate: esxupdate: ERROR: File "/usr/sbin/esxupdate", line 216, in main

2013-07-16T13:14:36Z esxupdate: esxupdate: ERROR: cmd.Run()

...

2013-07-16T13:14:36Z esxupdate: esxupdate: ERROR: InstallationError: ("set(['VMware_bootbank_net-nx-nic_4.0.557-3vmw.500.1.11.623860', 'VMware_bootbank_scsi-rste_2.0.2.0088-1vmw.500.1.11.623860', 'VMware_bootbank_scsi-megaraid-mbox_2.20.5.1-6vmw.500.1.11.623860', 'VMware_bootbank_scsi-mptsas_4.23.01.00-5vmw.500.1.18.768111', 'VMware_bootbank_block-cciss_3.6.14-10vmw.500.1.11.623860', 'VMware_bootbank_ipmi-ipmi-si-drv_39.1-4vmw.500.2.26.914586', 'VMware_bootbank_ata-pata-amd_0.3.10-3vmw.500.1.11.623860', 'Broadcom_bootbank_misc-cnic-register_1.72.1.v50.1-1OEM.500.0.0.472560', 'VMware_bootbank_ipmi-ipmi-msghandler_39.1-4vmw.500.1.11.623860', 'VMware_bootbank_esx-tboot_5.0.0-2.26.914586', 'VMware_bootbank_scsi-mpt2sas_06.00.00.00-6vmw.500.1.11.623860', 'VMware_bootbank_uhci-usb-uhci_1.0-3vmw.500.1.11.623860', 'VMware_bootbank_scsi-mptspi_4.23.01.00-5vmw.500.1.11.623860', 'VMware_bootbank_net-r8168_8.013.00-3vmw.500.1.11.623860', 'VMware_bootbank_ohci-usb-ohci_1.0-3vmw.500.1.11.623860', 'VMware_bootbank_ima-qla4xxx_2.01.07-1vmw.5

...

2013-07-16T13:14:41Z esxupdate: HostImage: DEBUG: Live image has been updated but /altbootbank image has not. This means a reboot is not safe.
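For a long esxupdate.log it can help to pull out only the ERROR lines and check for the "reboot is not safe" warning before touching the host. Below is a minimal sketch, assuming every line follows the `timestamp esxupdate: component: LEVEL: message` layout seen in the excerpts above. The regular expression and the `summarize` function name are my own illustration, not part of any VMware tooling.

```python
import re

# Assumed layout, based only on the excerpts above:
#   <timestamp> esxupdate: <component>: <LEVEL>: <message>
LINE_RE = re.compile(
    r"^(?P<ts>\S+) esxupdate: (?P<component>[^:]+): (?P<level>[A-Z]+): (?P<msg>.*)$"
)

def summarize(lines):
    """Return (list of ERROR messages, True if the log says a reboot is unsafe)."""
    errors = []
    reboot_unsafe = False
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip blank lines and anything not in the assumed layout
        if m.group("level") == "ERROR":
            errors.append(m.group("msg"))
        if "reboot is not safe" in m.group("msg"):
            reboot_unsafe = True
    return errors, reboot_unsafe

# Typical use on a copy of the log:
#   with open("/var/log/esxupdate.log") as f:
#       errors, reboot_unsafe = summarize(f)
```

If `reboot_unsafe` comes back true, that is the cue to fix the altbootbank problem before rebooting.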

Ignoring the “a reboot is not safe” message, I bounced the host hoping that a reboot would clear up whatever problem existed and the patch would then install successfully. Nope!

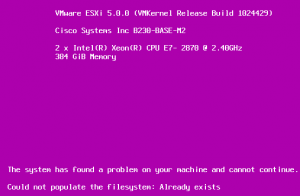

This lovely purple boot error screen appeared right after “VMkernel started successfully.” ALT+F12 and the other console key combinations did nothing. Ultimately I opened a case with VMware, and according to an internal KB article, this can occur on Cisco UCS systems booting from FC SAN, which was the setup here:

Symptoms

A freshly installed ESXi 5.0 host does not load after a reboot.

If you are booting from FC SAN and having hardware issue the ESXi 5 host can purple screen after the reboot.

Note: This issue is seen to occur during the Cisco UCS system boot from FC SAN scenario and the system file gets corrupted.

The ESXi console shows a purple screen that says:

The system has found a problem on your machine and cannot continue. Could not populate the filesystem: Already exists

Cause

This issue may be caused by a BIOS remap or a glitch of the Raid Array BIOS.

Resolution

To resolve this issue, perform an upgrade install on the ESXi host:

Boot on ESXi 5 install media.

Choose upgrade ESXi and preserve the vmfs datastore.

The host is rebuilt according to the new hardware/BIOS layout.

Additional Information

If the host fails with a purple screen when booting from FC SAN, you may have to perform an upgrade install to repair the installation.

I rebooted the blade and booted off the Cisco custom ESXi installer media, even though the build was quite old. I performed an Upgrade on the existing ESXi install on its boot LUN and after it was done, the blade booted successfully. Its configuration was intact and its build number was current.

I tried installing the patch again, and it failed again, but at least with a different error message:

esxupdate: esxupdate: ERROR: InstallationError: ('', 'There was an error checking file system on altbootbank, please see log for detail.')

According to VMware KB 2033564, running dosfsck -a -w against the altbootbank partition will fix any errors and then the update can be attempted again.

To find the altbootbank disk, follow the instructions in the KB article or look in the esxupdate.log:

2013-07-16T13:11:12Z esxupdate: vmware.runcommand: INFO: runcommand called with: args = '['/sbin/dosfsck', '-a', '-v', '/dev/disks/naa.60060e8006d022000000d0220000110c:6']', outfile = 'None', returnoutput = 'True', timeout = '0.0'.

The “naa.600…” is the altbootbank – don’t forget the :6 (partition number) at the end!
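Rather than eyeballing the log for the device, the lookup can be scripted. This is a rough sketch, assuming the runcommand line always looks like the single excerpt above. `find_altbootbank_device` and `repair_command` are hypothetical helper names of mine. The `-a -w` flags are the ones mentioned above from the KB, not the `-a -v` that esxupdate itself ran.

```python
import re

# Looks for the /dev/disks/... argument that follows '/sbin/dosfsck'
# in an esxupdate runcommand log line (format assumed from the excerpt above).
DOSFSCK_RE = re.compile(r"'/sbin/dosfsck'.*?'(/dev/disks/[^']+)'")

def find_altbootbank_device(lines):
    """Return the device from the last dosfsck call in the log, or None."""
    device = None
    for line in lines:
        m = DOSFSCK_RE.search(line)
        if m:
            device = m.group(1)  # keeps the trailing :<partition number>
    return device

def repair_command(device):
    """Build the dosfsck invocation described above as an argument list."""
    return ["dosfsck", "-a", "-w", device]

# Typical use on a copy of the log:
#   with open("/var/log/esxupdate.log") as f:
#       device = find_altbootbank_device(f)
#   if device:
#       print(" ".join(repair_command(device)))
```

The script only prints the command. I would still run it by hand on the host after checking that the device and partition number look right.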

Once dosfsck comes back clean, attempt the update install again and it should now be successful, including a successful reboot.